CodecFlow: Crypto x Robotics

"In 30 years, a robot will likely be on the cover of Time magazine as the best CEO. Machines will do what human beings are incapable of doing. Machines will partner and cooperate with humans, rather than become mankind's biggest enemy."

This quote from Jack Ma, founder of Alibaba, captures the potential of robotics and AI. The reality is that robotics is the future. Within crypto itself, we have noticed a growing number of funds building investment theses around this phenomenon. Two years ago, we started bullposting about AI projects and arguing that this new trend would produce multiples the way DeFi and NFTs did. That trend gave rise to the first wave of AI projects, with Bittensor, Virtuals, and ElizaOS taking the spotlight. The second wave of multiples could come from within the same category (AI):

Robotics.

Context: case studies

Every person who works, has worked, or will work eventually encounters repetitive and monotonous tasks. No industry is untouched: from technology and financial services to healthcare and retail, the problem is universal. These tasks have far-reaching consequences, affecting not only the mental and physical well-being of employees but also the overall efficiency of the organization and, ultimately, its financial performance.

Studies in cognitive psychology show that performance declines the longer someone remains engaged in the same activity, an effect known as time-on-task. A clear example is the vigilance decrement, where the ability to detect important events (or “signals”) drops over time. In the classic Mackworth Clock Test, these signals were small deviations in the movement of a clock hand, an extra jump that appeared only occasionally and had to be reported by the participant. Detection rates fell by 10 to 15 percent within just the first 30 minutes of continuous monitoring. More recent research quantifies this effect in complex visual tasks. A 2022 study published in Frontiers in Psychology asked participants to watch a constant stream of shapes and symbols on a screen. Most items were irrelevant, but occasionally a specific target shape appeared (the signal) requiring an immediate response. After three hours of this repetitive task, reaction times to the targets increased by 5.2 percent and missed targets rose by 71 percent. This demonstrates that repetitive, prolonged work slows responses to critical events and greatly increases the risk of missing them altogether.

A lack of motivation and engagement in the workplace affects far more than the well-being of employees, as it also has a measurable impact on overall business performance. Reduced productivity, higher error rates, and greater staff turnover are all linked to motivation issues, and these factors directly influence both revenue and profitability. According to Gallup (a global analytics and advisory firm), disengaged employees cost the global economy around 8.8 trillion dollars each year, an amount equal to 9 percent of global GDP. In contrast, organizations with high employee engagement generate on average twice the revenue growth of those with lower engagement levels. Public companies in the highest engagement tier report a 28 percent increase in earnings per share over a 12-month period, whereas those with low engagement experience an average decline of 11 percent in the same timeframe. Companies with the most satisfied employees outperform the S&P 500 by 20 percent and the Nasdaq by 30 percent, demonstrating that a motivated workforce not only strengthens business results but also enhances long-term market value.

Although these studies focus mostly on employees within companies, the point of this context is that repetitive work has been shown, in controlled studies, to reduce productivity.

These findings explain why robotics is so important and worth the hype: robots take over repetitive work, which they perform faster and more reliably, so humans can focus on high-value activities. That helps preserve motivation, reduce burnout risk, and ultimately improve both productivity and financial performance.

AI and Robotics: the issue

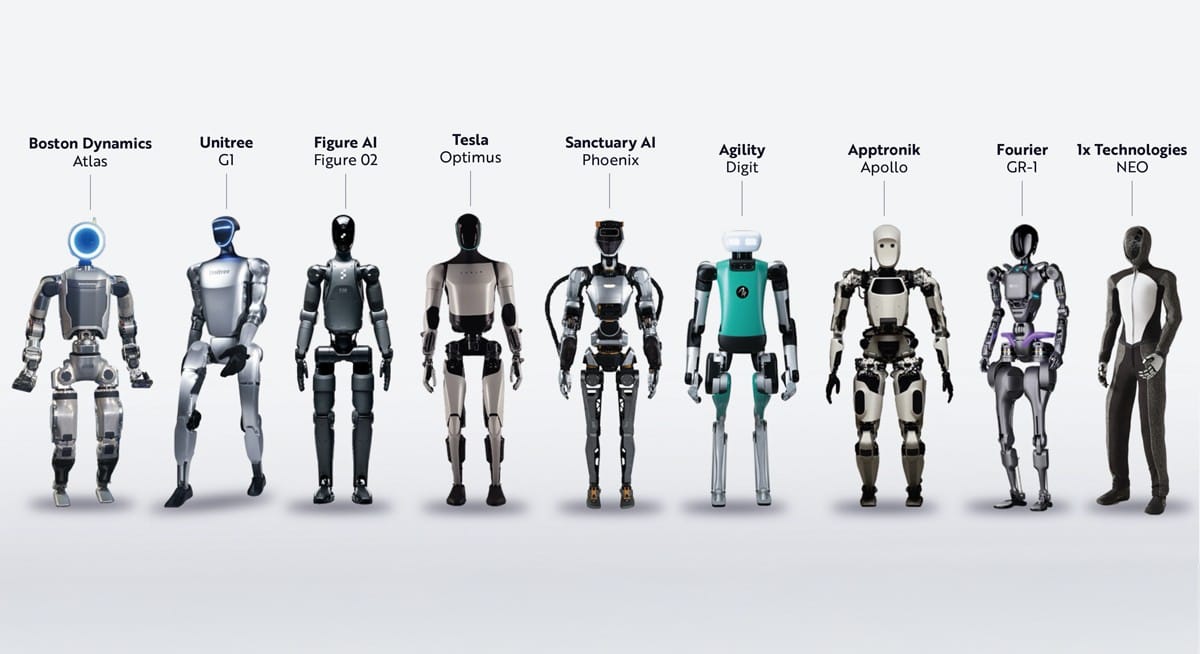

There are 2 ways to interpret 'robotics':

- Humanoid robotics (performing repetitive physical tasks)

- Digital robotics (AI-driven software robots performing repetitive digital tasks)

This article focuses on the latter. We believe digital robotics is the next opportunity because it fits the digital world that crypto already operates in.

Digital robotics currently has one major issue.

Traditional large language models (LLMs) operate purely on text. They generate output by predicting the most likely sequence of words based on patterns in language data. While this can produce fluent and convincing responses, it doesn’t reflect any real understanding of context or the situation at hand. LLMs have no ability to perceive their surroundings; they rely entirely on user input as their reference point.

Because of this, they lack contextual awareness. They can’t interpret what’s happening on a screen, track how an interface is being used, or adjust their behavior when something in the environment changes unless extra systems are added to help them. Take a case where a user interface is updated: if a “Submit” button is renamed to “Send” or moved elsewhere on the page, the LLM won’t notice. It will behave as if the interface is unchanged, which can result in incorrect responses or failed actions.

LLMs don’t natively interact with visual interfaces. So when a UI changes, they don’t "break" in the literal sense; they simply remain unaware of the change, because they have no way of observing it.

Enter CodecFlow.

CodecFlow: the first digital robotics platform

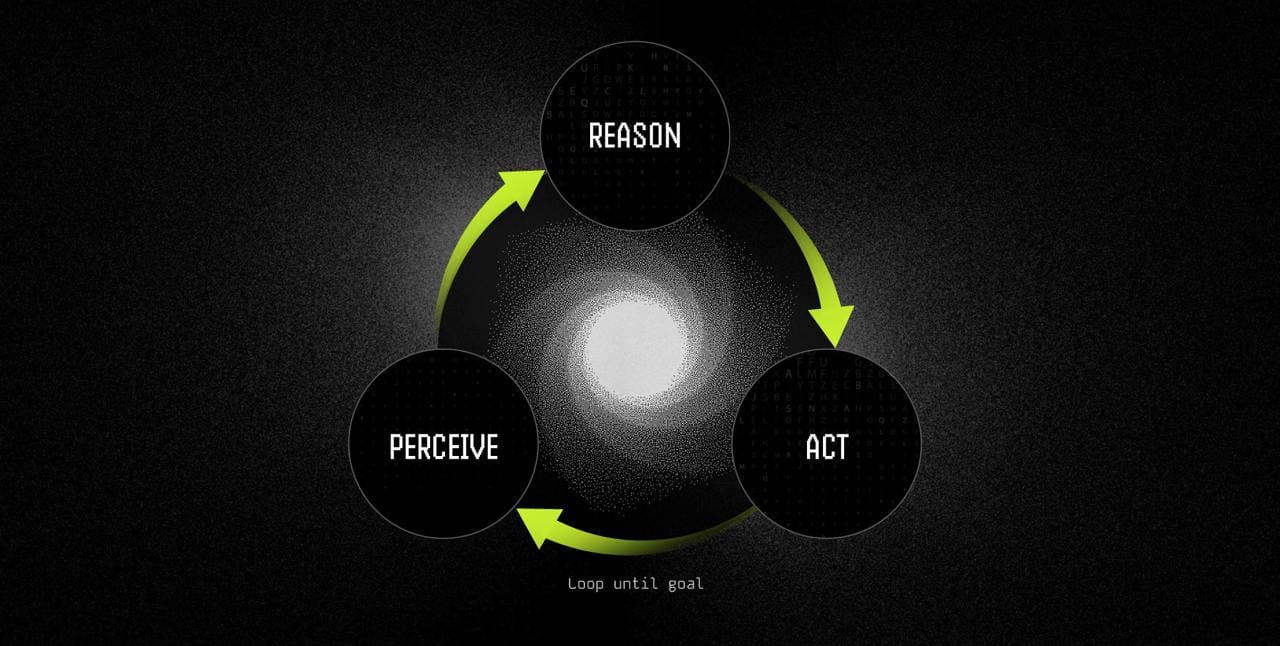

CodecFlow addresses this issue with its AI Operators. These Operators are autonomous software agents that perform tasks through a continuous perceive-reason-act cycle. They gather information from their surroundings through sensor data, screenshots, or camera feeds (perception). They then process these observations and instructions with vision-language models (reasoning) and execute their decisions through UI interactions or hardware control (action).

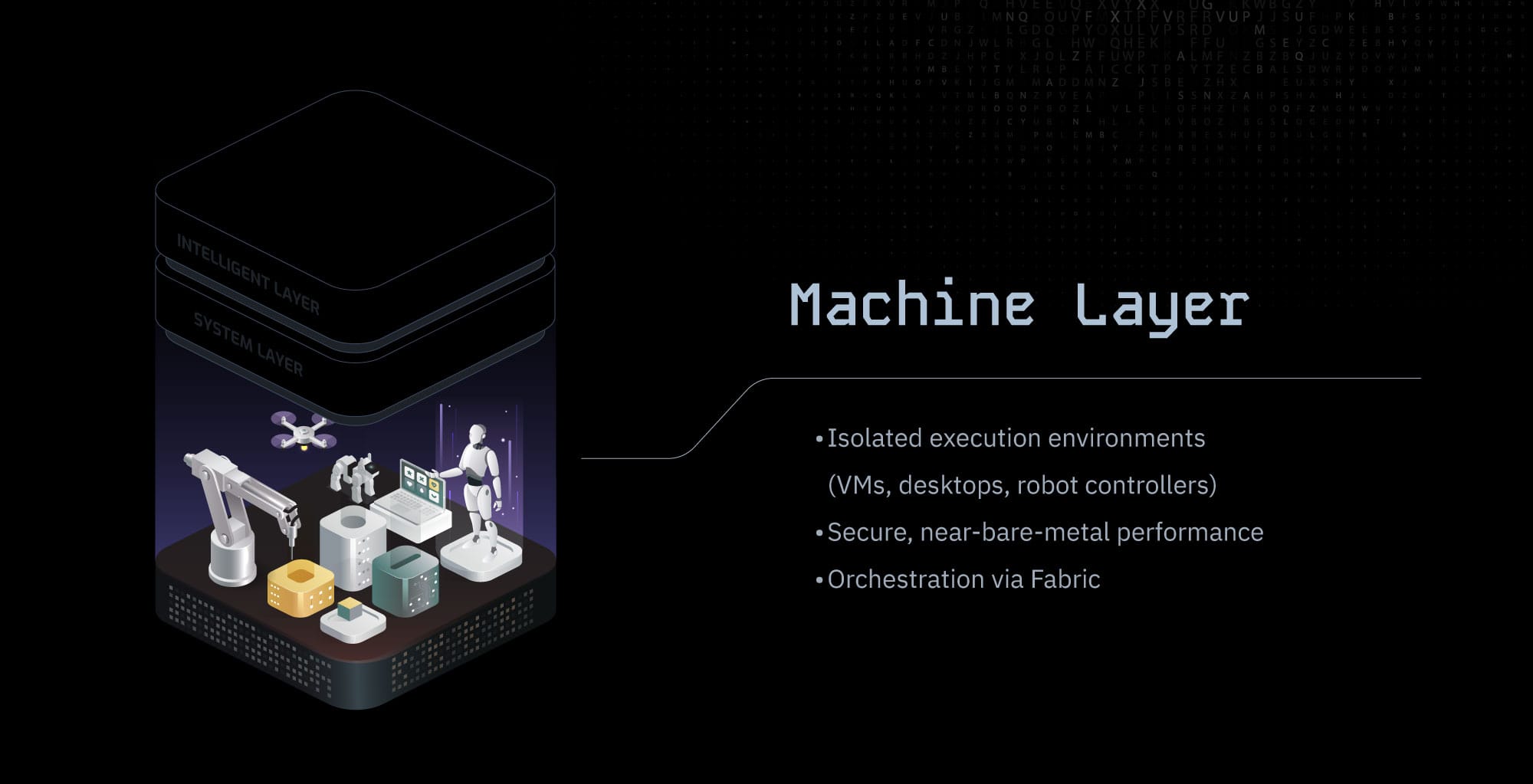

CodecFlow uses a layered architecture with three tiers: Machine, System, and Intelligence. Each tier supports a specific part of the Operator cycle.

Machine Layer

The Machine Layer is the base layer that supports and powers all Operators within the CodecFlow system. Each Operator runs in its own virtual machine, container, or microVM, fully separated from the others to guarantee both security and autonomy. Beyond these virtual environments, Operators can also run directly on physical robots or specialized hardware, treating those devices as their host machines.

Because every Operator runs in its own environment, each one is fully sandboxed. To further strengthen security, trusted execution environments (TEEs) can be used to protect sensitive code and data. The platform also relies on standard virtualization and container-based isolation techniques to prevent one Operator from interfering with another or with the host system. This modular approach limits the potential damage of a breach or malfunction.
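The isolation rule above (one Operator, one sandbox) can be sketched in Python. This is a minimal illustration under our own assumptions, not CodecFlow's code. `Isolation`, `Sandbox`, and `MachinePool` are hypothetical names. A real platform would enforce isolation in the hypervisor or container runtime, not in a registry like this one.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Isolation(Enum):
    """Hypothetical host types mirroring the options described above."""
    VM = "vm"
    CONTAINER = "container"
    MICROVM = "microvm"
    PHYSICAL = "physical"  # a robot or specialized device acting as the host


@dataclass(frozen=True)
class Sandbox:
    sandbox_id: str
    isolation: Isolation
    use_tee: bool = False  # optionally run sensitive code inside a TEE


class MachinePool:
    """Hypothetical registry that gives every Operator its own sandbox."""

    def __init__(self) -> None:
        self._assignments: Dict[str, Sandbox] = {}

    def assign(self, operator_id: str, sandbox: Sandbox) -> None:
        if operator_id in self._assignments:
            raise ValueError(f"{operator_id} already has a sandbox")
        # Refuse to place two Operators in the same environment.
        if any(s.sandbox_id == sandbox.sandbox_id for s in self._assignments.values()):
            raise ValueError(f"sandbox {sandbox.sandbox_id} is already in use")
        self._assignments[operator_id] = sandbox

    def sandbox_of(self, operator_id: str) -> Sandbox:
        return self._assignments[operator_id]
```

In this model, rejecting a shared `sandbox_id` at assignment time is what keeps a breach or crash contained to a single Operator.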

System Layer

The System Layer prepares the necessary environments and enables two-way communication between Operators and their target machines. When an Operator is deployed, the system provisions the right operating environment. This can be a desktop operating system such as Windows, Linux, or macOS, a virtual mobile device, or a software stack on specialized hardware such as a robot controller.

CodecFlow’s tooling is designed to integrate Operators seamlessly into their environments. It does this by establishing real-time communication through CodecFlow’s custom WebRTC implementation: desktop displays or robot camera feeds are captured as low-latency video streams and sent back to the Operator, enabling real-time monitoring.
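One common way to keep a live video feed low-latency is to let a slow consumer skip stale frames instead of queueing them. We do not know how CodecFlow's WebRTC stack handles this internally. The hypothetical `LatestFrameBuffer` below only illustrates the general technique, and the transport itself is omitted.

```python
import threading
from typing import Optional


class LatestFrameBuffer:
    """Keeps only the newest frame so a slow consumer never falls behind.

    Hypothetical helper illustrating a common low-latency pattern.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[bytes] = None
        self._seq = 0        # sequence number of the newest frame pushed
        self._read_seq = 0   # sequence number last handed to the consumer
        self.dropped = 0     # frames overwritten before anyone read them

    def push(self, frame: bytes) -> None:
        """Called by the capture side for every new frame."""
        with self._lock:
            if self._seq > self._read_seq:
                self.dropped += 1  # the previous frame was never consumed
            self._frame = frame
            self._seq += 1

    def pop_latest(self) -> Optional[bytes]:
        """Return the newest unseen frame, or None if nothing new arrived."""
        with self._lock:
            if self._seq == self._read_seq:
                return None
            self._read_seq = self._seq
            return self._frame
```

Dropping frames trades completeness for freshness. That is usually the right call when an Operator must react to what is on screen now rather than replay history.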

Alongside the video stream, the system can simulate mouse clicks, movements, scrolling, and keystrokes on Windows, Linux, or macOS without separate code for each operating system. In other words, it provides unified input emulation.
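Unified input emulation amounts to one platform-neutral API with the per-OS translation hidden behind it. The sketch below is illustrative only. `UnifiedInput` and the event classes are hypothetical, and events are recorded rather than sent to a real OS. The only OS-specific rule shown is the familiar one that editing shortcuts use Command on macOS and Control on Windows and Linux.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass(frozen=True)
class Click:
    x: int
    y: int


@dataclass(frozen=True)
class Scroll:
    dy: int  # positive scrolls down, negative scrolls up (our convention)


@dataclass(frozen=True)
class KeyChord:
    keys: Tuple[str, ...]  # keys pressed together, modifiers first


Event = Union[Click, Scroll, KeyChord]

# Editing shortcuts use Command on macOS and Control on Windows and Linux.
_MODIFIER = {"windows": "ctrl", "linux": "ctrl", "macos": "cmd"}
_SHORTCUTS = {"copy": "c", "paste": "v", "select_all": "a"}


class UnifiedInput:
    """One input API for every OS; only this class knows the differences.

    Hypothetical sketch: a real backend would inject these events into the
    target machine; here they are just recorded in `sent`.
    """

    def __init__(self, os_name: str) -> None:
        if os_name not in _MODIFIER:
            raise ValueError(f"unsupported OS: {os_name}")
        self.os_name = os_name
        self.sent: List[Event] = []

    def click(self, x: int, y: int) -> None:
        self.sent.append(Click(x, y))

    def scroll(self, dy: int) -> None:
        self.sent.append(Scroll(dy))

    def type_text(self, text: str) -> None:
        self.sent.extend(KeyChord((ch,)) for ch in text)

    def shortcut(self, name: str) -> None:
        self.sent.append(KeyChord((_MODIFIER[self.os_name], _SHORTCUTS[name])))
```

A caller writes `ui.shortcut("copy")` once. The same Operator logic then works whether `ui` targets a Mac or a Windows machine.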

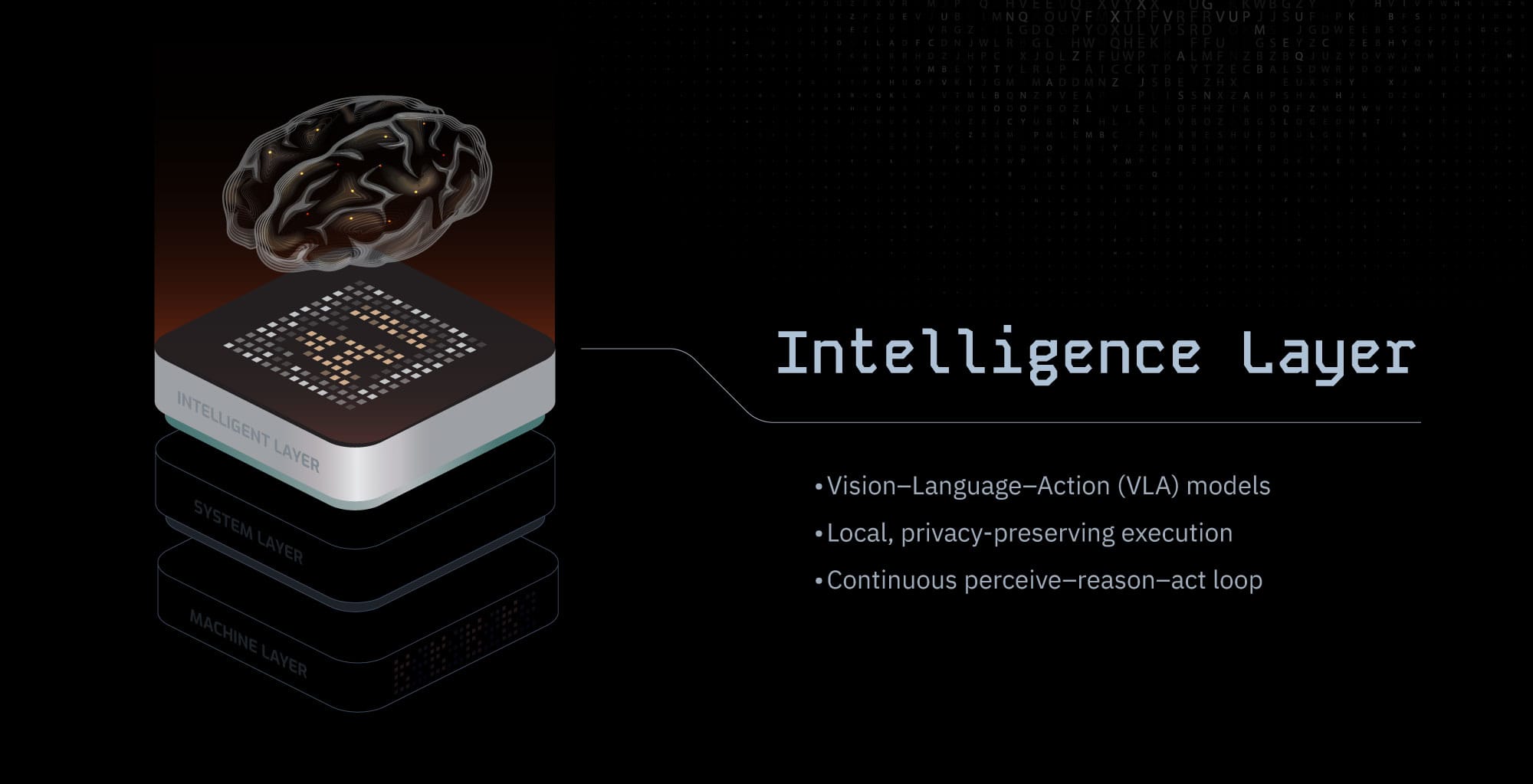

Intelligence Layer

The Intelligence Layer is where each Operator makes its decisions. As mentioned earlier, CodecFlow sets itself apart by using Vision-Language-Action (VLA) models, which interpret both visual input and natural-language instructions and turn them into actions. This allows actions to be carried out directly on the device, without help from external systems.

While running, each Operator continuously cycles through the following steps:

- Capture observations

- Receive instructions

- Process these through the VLA model to determine actions

- Execute the actions

- Observe the results
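The five steps above map onto a simple control loop. In this minimal sketch a plain function stands in for the VLA model. `Observation`, `Action`, `Environment`, and `run_operator` are hypothetical names, not CodecFlow's SDK. The `max_steps` cap is our own safeguard against an Operator that never finishes.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


@dataclass(frozen=True)
class Observation:
    screen_text: str  # stand-in for a screenshot, camera frame or sensor reading


@dataclass(frozen=True)
class Action:
    kind: str         # e.g. "click", "type" or "done"
    target: str = ""


class Environment(Protocol):
    def observe(self) -> Observation: ...
    def execute(self, action: Action) -> None: ...


# Stand-in for the VLA model: (instruction, observation) -> next action.
Policy = Callable[[str, Observation], Action]


def run_operator(env: Environment, policy: Policy, instruction: str,
                 max_steps: int = 20) -> List[Action]:
    """Run the observe -> decide -> act -> re-observe cycle until done."""
    history: List[Action] = []
    obs = env.observe()                    # 1. capture observations
    for _ in range(max_steps):
        # 2-3. combine the instruction with the observation to pick an action
        action = policy(instruction, obs)
        if action.kind == "done":
            break
        env.execute(action)                # 4. execute the action
        history.append(action)
        obs = env.observe()                # 5. observe the results
    return history
```

The `policy` argument is where a real VLA model (or several models) would plug in. The loop itself stays the same.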

The Operator connects language to what it sees on screen, which lets it understand the current context. As a result, it can identify buttons, read on-screen content, and interpret camera feeds to respond to the situation in real time. Because these decisions are made on the device itself, this also leads to faster response times, improved privacy, and more consistent performance.
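Grounding an instruction in what is on screen is what lets an Operator cope with the renamed "Submit" button described earlier. The sketch below is a deliberately crude stand-in. Where a VLA model would match meaning, it matches text with Python's standard `difflib`. It also assumes that a hypothetical upstream detector has already produced labeled `UIElement` boxes.

```python
import difflib
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class UIElement:
    label: str                       # text read from the screen
    box: Tuple[int, int, int, int]   # (left, top, right, bottom) in pixels

    def center(self) -> Tuple[int, int]:
        left, top, right, bottom = self.box
        return ((left + right) // 2, (top + bottom) // 2)


def ground(target_labels: Iterable[str], elements: List[UIElement],
           cutoff: float = 0.8) -> Optional[Tuple[int, int]]:
    """Return a click point for the first target label found on screen.

    Targets are tried in order; each is fuzzy-matched (difflib) against the
    labels currently visible. Returns None when nothing matches.
    """
    by_label = {e.label.lower(): e for e in elements}
    for target in target_labels:
        hits = difflib.get_close_matches(target.lower(), list(by_label),
                                         n=1, cutoff=cutoff)
        if hits:
            return by_label[hits[0]].center()
    return None
```

`ground(["Submit", "Send"], elements)` still returns a click point after the button is renamed, because the lookup runs against the current screen instead of assuming the old layout.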

While the VLA model plays a central role in powering Operators, other modern AI techniques can also be used to train and refine them. Research shows that combining language and visual understanding leads to more flexible and capable behavior. Because one or more models can form the Operator's decision-making core, the system can be fine-tuned to adapt smoothly to different tasks and operating environments.

Building Operators

Another distinctive feature of CodecFlow is that Operators can be created without writing code, which makes the platform accessible to a much larger user base. Normally, only people with coding skills can write an Operator script. CodecFlow instead lets anyone train the AI through a web dashboard, without any programming knowledge. Non-technical users simply record a video or capture their screen while performing a task and upload it to the platform. After they label the steps, the platform uses imitation learning to train the Operator to replicate what is shown in the recording.

Screen recording works much like techniques that capture keystrokes or mouse movements to document a workflow. Both produce a detailed outline of the process, which can then be used to teach the Operator to perform the same actions. Once trained, the Operator can be refined through user feedback and adjustments, gradually improving until it performs the task reliably.
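Before imitation learning can use a recording, the raw input events have to be paired with the steps the user labeled. The sketch below shows only that data-preparation step. `InputEvent`, `StepLabel`, and `segment` are hypothetical, and the training itself is out of scope.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class InputEvent:
    t: float      # seconds since the recording started
    kind: str     # "click", "key", "scroll", ...
    detail: str   # e.g. click coordinates or the key pressed


@dataclass(frozen=True)
class StepLabel:
    name: str     # label the user gave this step in the dashboard
    start: float  # window start, inclusive (seconds)
    end: float    # window end, exclusive (seconds)


def segment(events: List[InputEvent],
            labels: List[StepLabel]) -> List[Tuple[str, List[InputEvent]]]:
    """Pair each labeled step with the input events recorded during it.

    Steps come back in chronological order; events that fall outside every
    labeled window are dropped as noise.
    """
    ordered = sorted(labels, key=lambda step: step.start)
    return [(step.name, [e for e in events if step.start <= e.t < step.end])
            for step in ordered]
```

Each `(step name, events)` pair is one demonstration example that a downstream imitation-learning pipeline could consume.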

For users with a technical background, CodecFlow offers a more in-depth option for building Operators. Using the platform’s software development kits, they can write custom code, integrate third-party APIs, and use datasets to fine-tune how an Operator behaves. This opens the door to creating more complex workflows that go beyond what is possible through demonstration-based training. While training by example is fast and straightforward, it cannot always match the precision and depth of control that coding provides.

What makes this approach even more powerful is that Operators in CodecFlow work in a hybrid mode once deployed. They automatically choose the most effective way to complete a task, calling APIs whenever possible but switching smoothly to user interface automation when needed. This combination maximizes both performance and compatibility, allowing Operators to function effectively across many different systems and environments.
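The API-first, UI-fallback behaviour can be sketched as a small dispatcher. The names `ApiUnavailable` and `run_hybrid` are hypothetical. Which errors should trigger a fallback is a design assumption: here only a missing API or a connection problem does, so genuine bugs in the API path are not silently hidden.

```python
from typing import Callable, Optional, Tuple


class ApiUnavailable(Exception):
    """Raised when the target system exposes no API for the requested task."""


def run_hybrid(task: str,
               call_api: Optional[Callable[[str], str]],
               automate_ui: Callable[[str], str]) -> Tuple[str, str]:
    """Try the API path first; fall back to UI automation when it is unavailable.

    Returns (path_used, result) so callers can see which route ran.
    """
    if call_api is not None:
        try:
            return ("api", call_api(task))
        except (ApiUnavailable, ConnectionError, TimeoutError):
            pass  # no usable API right now: drive the GUI instead
    return ("ui", automate_ui(task))
```

Any other exception propagates, so a broken API integration surfaces as an error instead of quietly turning into slower UI automation.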

Operator Marketplace

In addition to creating Operators for their own use, developers can choose to publish their creations on the CodecFlow Marketplace. This gives others the ability to browse, select, and integrate Operators into their own systems. It encourages the growth of a collaborative community while allowing creators to earn $CODEC in usage fees whenever their Operators are used.
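Usage-based earnings reduce to a ledger that credits a creator each time their Operator runs. This is a hypothetical sketch. The source does not describe CodecFlow's fee schedule, so the flat per-use price, the `UsageLedger` class, and the absence of any platform cut are all assumptions. `Decimal` keeps token amounts free of floating-point rounding errors.

```python
from collections import defaultdict
from decimal import Decimal
from typing import DefaultDict, Dict


class UsageLedger:
    """Credits an Operator's creator each time the Operator is used.

    Hypothetical: flat per-use prices and no platform cut are assumptions.
    """

    def __init__(self) -> None:
        self.price: Dict[str, Decimal] = {}    # operator_id -> CODEC per use
        self.creator: Dict[str, str] = {}      # operator_id -> creator account
        self.balances: DefaultDict[str, Decimal] = defaultdict(Decimal)

    def publish(self, operator_id: str, creator: str, fee_per_use: Decimal) -> None:
        self.price[operator_id] = fee_per_use
        self.creator[operator_id] = creator

    def record_use(self, operator_id: str, uses: int = 1) -> None:
        if uses < 1:
            raise ValueError("uses must be positive")
        self.balances[self.creator[operator_id]] += self.price[operator_id] * uses
```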

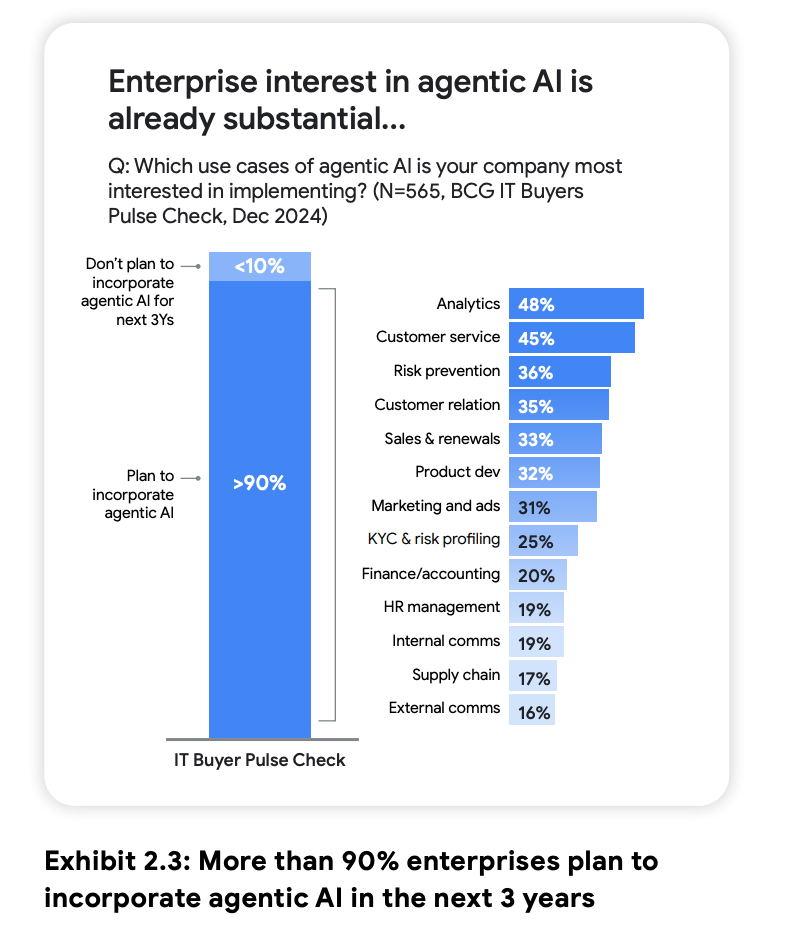

The marketplace model aligns with a wider trend in the AI agent market. A notable example is Google Cloud’s AI Agent Marketplace, where customers can easily find and deploy AI agents from partners. Research from Google shows that over 90% of enterprises are interested in adopting AI agents within the next three years. Its studies also highlight that AI agents could help solve large-scale challenges in almost every industry. From financial services and manufacturing to healthcare and retail, AI agents can take on specialized roles and deliver targeted value.

Google’s analysis further projects that, in a best-case scenario where the market reaches its full potential, the global opportunity for agentic AI could approach $1 trillion. Across industries there is strong demand for tailored, domain-specific agents, which plays directly to CodecFlow’s strengths. Operators can be fine-tuned with different models to suit specific tasks and industry requirements. They are also model-agnostic and can run across various systems without modification, which makes them highly adaptable and widely deployable.

Use cases

The way CodecFlow works can be applied across a wide range of scenarios. As noted earlier, one of the most straightforward applications is workflow automation, essentially functioning as desktop automation. By interacting directly with GUIs, Operators can take over repetitive office and computer tasks, saving users time and reducing the margin for error. This frees people to focus on the work that truly matters, rather than spending hours on repetitive tasks such as routine data entry.

Beyond office environments, Operators can also play a role in gaming. During a game’s development phase, extensive testing is needed to find and fix bugs. An Operator can be deployed to behave like a human player in the virtual world, systematically navigating menus, playing through levels, and testing movement mechanics. As it learns the gameplay, an Operator can adjust its strategy autonomously, acting as a tireless digital tester. By handling these tasks, Operators shorten development cycles, enable continuous improvement, and keep testing without the fatigue that slows human testers.
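Systematic menu navigation can be modelled as a breadth-first search over a graph of screens. In this sketch the menu graph is handed to `explore_menus`, a hypothetical helper, as a dictionary. An actual Operator would build that graph by observing screens as it plays.

```python
from collections import deque
from typing import Dict, List


def explore_menus(menu: Dict[str, Dict[str, str]], start: str) -> Dict[str, List[str]]:
    """Breadth-first walk of a game's menu graph.

    `menu` maps each screen to {button label: screen it leads to}. Returns,
    for every reachable screen, the shortest sequence of button presses that
    reaches it, which a tester can replay to reproduce a bug.
    """
    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        for button, nxt in menu.get(screen, {}).items():
            if nxt not in paths:  # first visit is the shortest path in BFS
                paths[nxt] = paths[screen] + [button]
                queue.append(nxt)
    return paths
```

Comparing the result against the screens a game is supposed to have (for example `expected - set(paths)`) flags any menu that no sequence of button presses can reach.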

This brings us to perhaps the most important use case: robotics. The Machine Layer can connect directly to a robot’s hardware, allowing an Operator to control physical robots in real time. The Operator receives live camera feeds from the robot and makes decisions based on what it sees, whether that is moving boxes along a conveyor belt or piloting a drone. In this sense, the Operator acts as both the eyes and the brain of the robot.
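Turning "what it sees" into a motor command can be as simple as a proportional controller on the position of a detected object. The sketch below assumes a hypothetical detector that returns a `Detection` bounding box, and a robot or drone that accepts a normalised turn rate. `steer_towards`, `gain`, and `max_turn` are illustrative and not part of any CodecFlow API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Detection:
    label: str
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def steer_towards(det: Optional[Detection], frame_width: int,
                  gain: float = 1.0, max_turn: float = 1.0) -> float:
    """Proportional controller: turn rate from the target's horizontal offset.

    Returns a value in [-max_turn, max_turn]: negative turns left, positive
    turns right, and 0.0 holds course (also returned when nothing is detected).
    """
    if det is None:
        return 0.0
    left, _, right, _ = det.box
    center_x = (left + right) / 2
    # Offset normalised so -1 is the left edge and +1 the right edge.
    error = (center_x - frame_width / 2) / (frame_width / 2)
    return max(-max_turn, min(max_turn, gain * error))
```

Clamping the output keeps a detection at the edge of the frame from commanding an extreme turn. A production controller would typically add derivative or integral terms.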

Conclusion: CodecFlow as the robotics x crypto trade

The team has shown that it can ship the products described above. A recently launched demo shows digital robots autonomously analysing desktops.

Humanoid robotics may be more familiar, but digital robotics is also a multitrillion-dollar opportunity. Because it lives entirely in software, digital robotics is a more natural fit for crypto than physical humanoid robots.

$CODEC is shaping up to be one of the best trades (if not currently the best) for the "robotics x crypto" narrative. It launched with a market cap below $1 million at the end of April and has recently been gaining mindshare on Crypto Twitter. Its current market cap is $27 million, and the chart looks pre-parabolic as more investors become aware of the new trend. As more products are released, $CODEC is positioning itself as a prominent player in the digital robotics narrative.